I am running a Selenium job in a Nomad cluster. There is currently no custom meta information on the client side, so I am trying to express the job constraint for Selenium (access to /dev/shm) using the attributes Nomad already provides.

The message I got when starting the job:

### selenium-hub 1 unplaced

* Constraint `computed class ineligible` filtered 4 nodes

### selenium-nodes 1 unplaced

* Constraint `${driver.docker.volumes.enabled} = true` filtered 4 nodes

The job definition (a Terraform template) with the constraint section looks like this:

```hcl
job "${job_vars.stage}-selenium-hub" {
  region      = "global"
  datacenters = ["eu-central-1"]
  type        = "service"
  priority    = 50

  constraint {
    attribute = "$${driver.docker.volumes.enabled}"
    value     = "true"
  }

  # ... (rest of the job omitted)
}
```

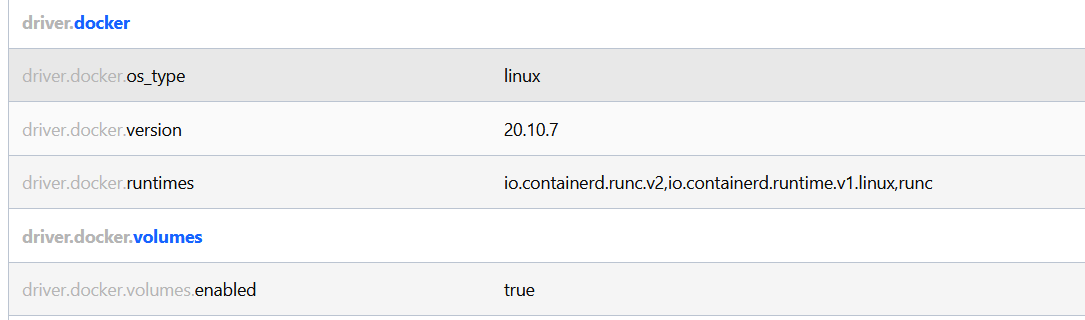

The nomad UI shows one client node that has this properties set:

When I replace the string literal "true" with the HCL boolean `true`, the error message changes slightly:

### selenium-hub 1 unplaced

* Constraint `${driver.docker.volumes.enabled} = 1` filtered 4 nodes

Hi @gthieleb,

Thanks for using Nomad!

A couple of questions for you.

Do you have the following on your clients?

```hcl
client {
  options = {
    "docker.volumes.enabled" = true
  }
}
```
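For comparison, here is a sketch assuming Nomad 1.x. In that version the `docker.volumes.enabled` client option is deprecated, and the same setting goes in the Docker plugin block of the client agent config. When volumes are enabled, the node fingerprints the `driver.docker.volumes.enabled` attribute, and constraints refer to it through the `attr.` namespace. The file location is an assumption.

```hcl
# Client agent config (for example, a file under /etc/nomad.d/), Nomad 1.x style.
plugin "docker" {
  config {
    volumes {
      # Lets Docker tasks bind-mount host paths such as /dev/shm
      enabled = true
    }
  }
}
```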

Are you using Levant, perchance?

Can you post your full job and agent config (with secrets redacted)?

Hi Derek,

The full Nomad job is at the bottom.

I experimented with the constraint syntax, trying both

```hcl
attribute = "driver.docker.volumes.enabled"
```

and

```hcl
attribute = "$${driver.docker.volumes.enabled}"
```

As my original post shows, one Linux node has volumes enabled. Here is its config (`sudo cat /etc/nomad.d/nomad*`):

```hcl
plugin "docker" {
  config {
    volumes {
      enabled = true
    }
  }
}

datacenter = "eu-central-1"
data_dir   = "/opt/nomad/data"
bind_addr  = "0.0.0.0"

# Enable the client
client {
  enabled = true
}

consul {
  address = "127.0.0.1:8500"
  token   = "********************"
}
```

What is Levant?

Here is the full job config:

```hcl
job "${job_vars.stage}-selenium" {
  region      = "global"
  datacenters = ["eu-central-1"]
  type        = "service"
  priority    = 50

  group "selenium" {
    count = 1

    constraint {
      attribute = "driver.docker.volumes.enabled"
      value     = true
    }

    network {
      port "selenium_hub" {
        to     = 4444
        static = 4444
      }
    }

    task "selenium-hub" {
      driver = "docker"

      config {
        image = "selenium/hub:3.141.59-20210713"
        ports = ["selenium_hub"]
      }

      env {
        GRID_UNREGISTER_IF_STILL_DOWN_AFTER = 4000
        GRID_NODE_POLLING                   = 2000
        GRID_CLEAN_UP_CYCLE                 = 2000
        GRID_TIMEOUT                        = 10000
      }

      service {
        name = "selenium-hub"
        tags = ["${job_vars.stage}", "selenium", "hub"]
        port = "selenium_hub"

        check {
          name     = "alive"
          type     = "tcp"
          interval = "10s"
          timeout  = "2s"
        }
      }

      resources {
        cpu    = 1000
        memory = 1024
      }

      logs {
        max_files     = 10
        max_file_size = 15
      }

      kill_timeout = "20s"
    }
  }

  group "selenium-nodes" {
    count = 1

    restart {
      attempts = 10
      interval = "5m"
      delay    = "25s"
      mode     = "delay"
    }

    network {
      port "selenium_node" {
        to     = 5555
        static = 5555
      }
    }

    task "selenium-node" {
      driver = "docker"

      template {
        data = <<EOH
HUB_HOST = "{{ with service "selenium-hub" }}{{ with index . 0 }}{{.Address}}{{ end }}{{ end }}"
SE_EVENT_BUS_HOST = "{{ with service "selenium-hub" }}{{ with index . 0 }}{{.Address}}{{ end }}{{ end }}"
SE_NODE_HOST = "$${NOMAD_IP_selenium_node}"
EOH

        env         = true
        destination = "local/env"
      }

      config {
        shm_size = 2097152
        ports    = ["selenium_node"]
        image    = "selenium/node-chrome:3.141.59-20210713"
        volumes  = ["/dev/shm:/dev/shm"]
      }

      service {
        name = "selenium-node"
        tags = ["${job_vars.stage}", "selenium", "node", "chrome"]
      }

      resources {
        cpu    = 1000
        memory = 4096
      }

      logs {
        max_files     = 10
        max_file_size = 15
      }

      kill_timeout = "20s"
    }
  }
}
```

A shot in the dark, but could it be `attr.driver.docker.volumes.enabled`?

In the past I faced a similar documentation issue, in pull request `hashicorp:main ← shantanugadgil:patch-4` (opened 03:18 AM UTC, 6 Jul 2021):

Between this page and https://www.nomadproject.io/docs/runtime/interpolation I … realized that the syntax on the Docker page was missing the word `attr`.

Nomad version: 1.1.2


Good news (sort of)! I've been able to reproduce this on my end. I'll start digging into what we're missing in this config and get back to you.

This works for me. @shantanugadgil was spot on: you need the `${}` around the entry, and you also need the `attr.` segment.

```hcl
constraint {
  attribute = "${attr.driver.docker.volumes.enabled}"
  value     = true
}
```
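Because the original job is rendered as a Terraform template, Terraform's template engine would try to interpolate a bare `${attr...}` itself. The job already escapes this kind of expression as `$${...}`, as in `$${NOMAD_IP_selenium_node}`. What follows is a sketch, assuming the same Terraform templating as in the original post, of the fix placed at job level so that it also covers the `selenium-nodes` group that bind-mounts /dev/shm. It keeps the quoted `"true"` value from the first snippet, because in the poster's setup the unquoted boolean was rendered as `1`.

```hcl
job "${job_vars.stage}-selenium" {
  region      = "global"
  datacenters = ["eu-central-1"]
  type        = "service"
  priority    = 50

  # Job-level constraint: applies to every group, including selenium-nodes.
  # $${...} escapes the expression from Terraform's template engine, so the
  # rendered job contains ${attr.driver.docker.volumes.enabled}, which Nomad
  # resolves against each client node's fingerprinted attributes.
  constraint {
    attribute = "$${attr.driver.docker.volumes.enabled}"
    value     = "true"
  }

  # ... groups "selenium" and "selenium-nodes" unchanged ...
}
```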

Wait… the bare-word form doesn't work (due to HCL2)?

```hcl
attribute = attr.driver.docker.volumes.enabled
```

I hadn't actually tried it; out of habit, I just used the `${}`. You are correct: the bare form works too. It looks like the issue was just the missing `attr.` segment.
